Sign & Gesture Spectrograph

Can we create a spectrograph for communicative movement? This project was my Master's thesis in Cognitive Science at UCSD.

Towards a Manual Spectrograph

The audio spectrograph allowed researchers (and others) to visualize sound and analyze language quantitatively. These visualizations made it possible to observe and study phenomena that are difficult to measure by ear alone. For the manual modality (gesture and sign languages), however, no comparably cheap and user-friendly tool exists.
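To make the analogy concrete, here is a minimal sketch of what a "manual spectrogram" could look like. It assumes skeleton data arrives as 3D joint positions at roughly 30 frames per second (an assumption about the capture setup, not a description of this project's software) and computes each joint's speed over time. The result is a joints-by-time matrix that can be drawn as a heat map, with joints standing in for the frequency bands of an audio spectrogram.

```python
import numpy as np

# Assumption: skeleton frames sampled at ~30 fps; adjust to the real capture rate.
FPS = 30.0


def manual_spectrogram(joints: np.ndarray, fps: float = FPS) -> np.ndarray:
    """Return a (n_joints, n_frames - 1) matrix of joint speeds in metres/second.

    joints: array of shape (n_frames, n_joints, 3) holding x, y, z positions in metres.
    Each output row is one joint's speed over time, analogous to one frequency
    band's energy over time in an audio spectrogram.
    """
    if joints.ndim != 3 or joints.shape[2] != 3:
        raise ValueError("expected an array of shape (n_frames, n_joints, 3)")
    # Frame-to-frame displacement vector of every joint.
    displacement = np.diff(joints, axis=0)  # (n_frames - 1, n_joints, 3)
    # Length of each displacement, scaled by the frame rate, gives speed.
    speed = np.linalg.norm(displacement, axis=2) * fps
    # Put joints on rows and time on columns, like frequency x time.
    return speed.T


# Usage: one joint moving 0.1 m per frame along x, one joint standing still.
positions = np.zeros((4, 2, 3))
positions[:, 0, 0] = [0.0, 0.1, 0.2, 0.3]
spec = manual_spectrogram(positions)
```

Passing the matrix to `matplotlib.pyplot.imshow(spec, aspect="auto")` would render it as a spectrogram-like image. Speed is only one choice of feature; acceleration or distance from the torso could fill the same rows.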

This work was supported by the UCSD Chancellor's Interdisciplinary Research Award, Jim Hollan's HCI Lab, and Carol Padden's Sign Lab.

Gross Typological Comparisons

With the Kinect and our software, researchers can quickly capture large amounts of movement data and spot general trends across participants or languages. Previously, this kind of analysis had to be done by hand, frame by frame (see McNeill, 1992).
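As an illustration of a gross comparison that needs no frame-by-frame coding, the sketch below summarizes how far each participant's hand travels from the torso (a rough proxy for the size of their gesture space) and averages that per group, such as a language or condition. The input layout, the choice of hand and torso joints, and the function names are all hypothetical; this is not the project's actual analysis.

```python
from collections import defaultdict

import numpy as np


def gesture_extent(hand: np.ndarray, torso: np.ndarray) -> float:
    """Mean Euclidean distance (metres) between a hand joint and a torso joint.

    hand, torso: arrays of shape (n_frames, 3) for one participant.
    """
    return float(np.linalg.norm(hand - torso, axis=1).mean())


def compare_groups(recordings):
    """Average gesture extent per group.

    recordings: iterable of (group, hand, torso) tuples, one per participant
    (hypothetical layout). Returns {group: mean extent across its participants}.
    """
    per_group = defaultdict(list)
    for group, hand, torso in recordings:
        per_group[group].append(gesture_extent(hand, torso))
    return {group: float(np.mean(values)) for group, values in per_group.items()}


# Usage with synthetic participants whose hands hold still at fixed offsets.
torso = np.zeros((5, 3))
data = [
    ("A", np.tile([0.3, 0.0, 0.0], (5, 1)), torso),
    ("A", np.tile([0.0, 0.5, 0.0], (5, 1)), torso),
    ("B", np.tile([0.0, 0.0, 0.2], (5, 1)), torso),
]
summary = compare_groups(data)
```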

Meaningful Gestures

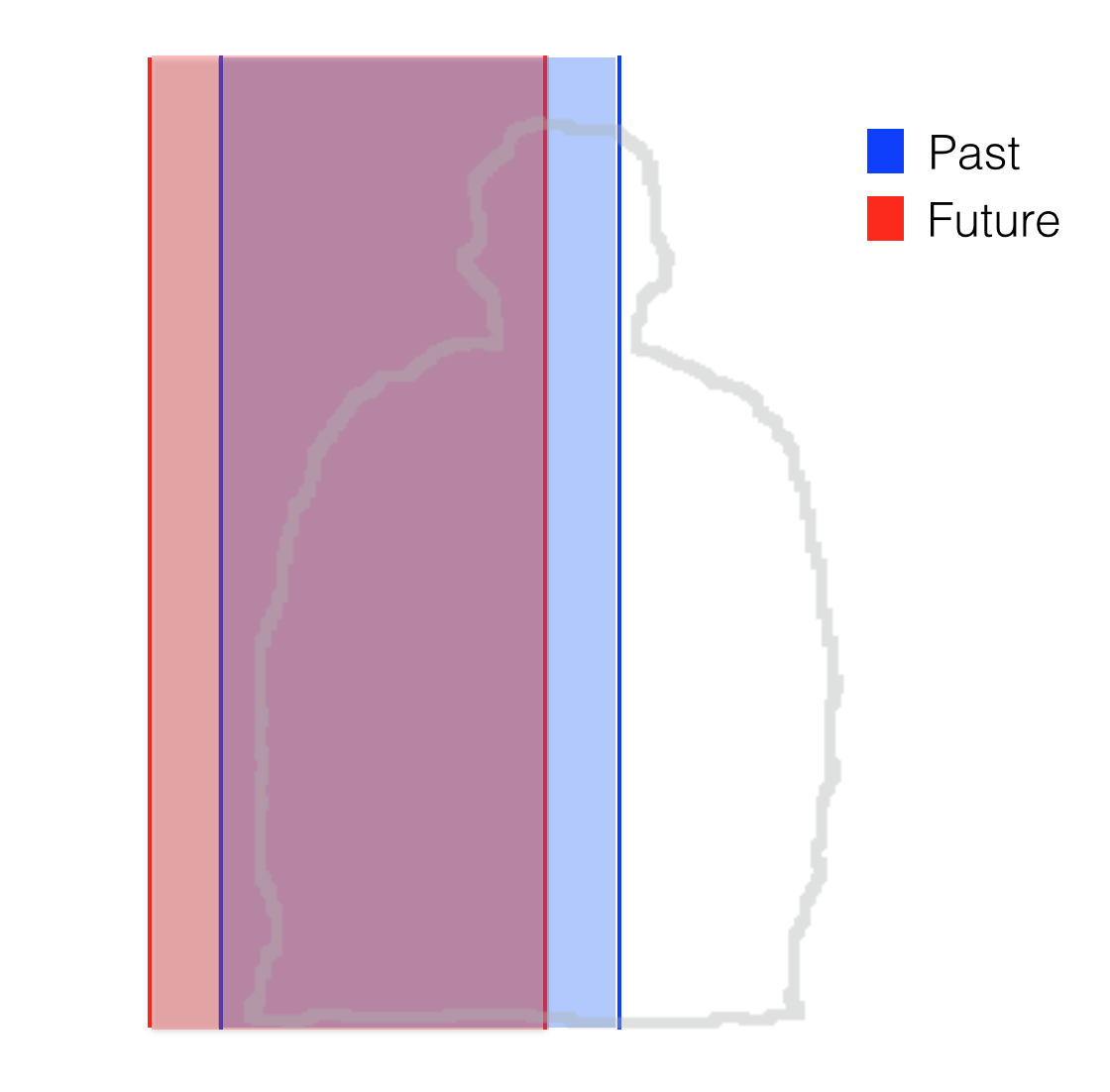

An experimental replication of Walker & Cooperrider (2015). I collected Kinect data of time-related gestures and replicated the earlier results without hand-coding. Given a tightly structured task and a large quantity of data, patterns emerge without human supervision, so gesture researchers can now benefit from Big Data approaches.
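One way such patterns could surface without hand-coding is to reduce each gesture to a single directional measure and average it per condition. The sketch below assumes trials are already segmented, that each trial is labelled with a condition such as "past" or "future", and that the x-axis has been oriented so positive values are the speaker's right. These are illustrative assumptions, not the replication's actual pipeline, and the sketch makes no claim about which direction the data favour.

```python
import numpy as np


def net_lateral(hand_x) -> float:
    """Net left-right displacement of the hand over one segmented gesture.

    Assumes x is oriented so that positive values point to the speaker's right.
    """
    xs = np.asarray(hand_x, dtype=float)
    return float(xs[-1] - xs[0])


def mean_by_condition(trials):
    """Mean net lateral displacement for each condition label.

    trials: iterable of (condition, hand_x) pairs, e.g. ("past", [...]).
    """
    sums, counts = {}, {}
    for condition, hand_x in trials:
        sums[condition] = sums.get(condition, 0.0) + net_lateral(hand_x)
        counts[condition] = counts.get(condition, 0) + 1
    return {c: sums[c] / counts[c] for c in sums}


# Usage with synthetic trials (not real data).
trials = [
    ("past", [0.0, -0.1, -0.2]),
    ("past", [0.1, 0.0, -0.1]),
    ("future", [0.0, 0.1, 0.3]),
]
means = mean_by_condition(trials)
```

With enough trials, a difference between the per-condition means could then be checked with an ordinary statistical test rather than by eye.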

Kinect + Evolution of Gestures

Using my software and Tessa Verhoef's language evolution paradigm, we explored in depth how to measure the way gestures evolve across generations in a charades-style game. The paradigm is designed to mimic the evolution of signed languages.
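As an example of how change across generations might be quantified, the sketch below compares hand trajectories for the same item between consecutive generations using dynamic time warping (DTW), which tolerates differences in speed and timing between two performances. DTW is a swapped-in, standard technique chosen for illustration; it is not necessarily the measure used in the study, and the function names are hypothetical.

```python
import numpy as np


def dtw_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Dynamic time warping distance between trajectories of shape (n, d) and (m, d).

    Uses Euclidean distance between frames and the standard recurrence over
    insertion, deletion, and match steps.
    """
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = float(np.linalg.norm(a[i - 1] - b[j - 1]))
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])


def generational_drift(chain):
    """DTW distance between each generation's trajectory and the next one.

    chain: list of (n_frames, d) arrays for the same item, ordered by generation.
    """
    return [dtw_distance(chain[k], chain[k + 1]) for k in range(len(chain) - 1)]


# Usage: generation 2 repeats generation 1 more slowly; generation 3 is shifted.
g1 = np.array([[0.0], [1.0], [2.0]])
g2 = np.array([[0.0], [1.0], [1.0], [2.0]])
g3 = np.array([[1.0], [2.0], [3.0]])
drift = generational_drift([g1, g2, g3])
```

A shrinking drift over later generations would be one possible signature of gestures becoming conventionalized, though interpreting it would depend on the experimental design.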

Namboodiripad, Lenzen, Lepic, & Verhoef (2016).

A ChronoViz Plugin

I integrated the visualizations into the ChronoViz software, allowing researchers to view only the annotated segments that had been hand-coded.
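The core of viewing only hand-coded segments is selecting the motion-capture frames that fall inside annotation intervals. The sketch below shows that filtering step in isolation, assuming annotations are stored as start and end times in seconds with a label. It does not reproduce the plugin or any ChronoViz interface; the `Annotation` type and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Annotation:
    start: float  # segment start, seconds (assumed unit)
    end: float    # segment end, seconds (exclusive)
    label: str    # hand-coded category


def frames_in_annotations(
    timestamps: Sequence[float], annotations: List[Annotation]
) -> List[Tuple[int, str]]:
    """Return (frame_index, label) for every frame inside a segment [start, end)."""
    hits = []
    for i, t in enumerate(timestamps):
        for ann in annotations:
            if ann.start <= t < ann.end:
                hits.append((i, ann.label))
    return hits


# Usage: frames every 0.5 s, one hand-coded pointing segment.
stamps = [0.0, 0.5, 1.0, 1.5, 2.0]
selected = frames_in_annotations(stamps, [Annotation(0.4, 1.2, "point")])
```

The nested loop is fine for short sessions; for long recordings, sorting both lists and sweeping through them once would scale better.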

Various other visualizations